How to improve Google Maps incident reporting with voice interactions

Google has been pushing the development of the Google Maps mobile app over the last few months. I noticed this in the incident reporting. It’s now possible not only to report incidents such as crashes, traffic jams, or obstacles on the street, but also to report whether a previously reported incident is still present. Through its Google Maps users, Google collects a large amount of data that it can use to predict and show real-time traffic (red / yellow polyline) [1]. Once again, providing a “free” product works. Users pay with their (traffic) data [2].

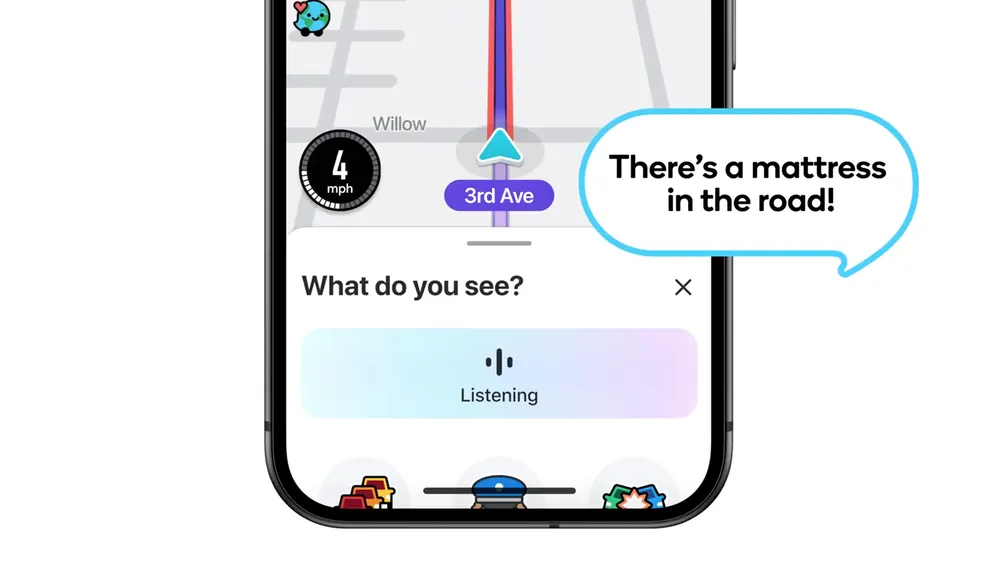

With these features, Google Maps increasingly follows Waze, whose navigation and traffic app has great traffic reporting tooling. Some people even think that at one point Waze will lose its market position and will be bought by a competitor like Google. Last October, Waze introduced voice incident reporting. This lets users report incidents using natural language, that is, their voice, while driving, without touching or looking at their phone once the reporting process has been initiated. With the advancement of LLMs, this is a simple next step. Almost no custom natural language processing is needed anymore; mapping the voice input to a category can be as simple as:

- Use STT (Speech-to-text) to transcribe the user input

- Sanitize the input

- Let an LLM map the text input to an incident category, icon, and description

- Verify the output to filter abuse, using either another LLM or keyword matching
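The steps above can be sketched roughly as follows. This is a minimal illustration, not how Waze or Google implement it: the category set, the blocklist, and every function name are hypothetical. The speech-to-text step is assumed to have already produced a transcript, and a simple keyword classifier stands in for the LLM call so the example runs on its own; a real LLM client could be passed in via the `classify` parameter.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical category set; the real app categories are not known here.
CATEGORIES = {"crash", "traffic_jam", "obstacle", "other"}

# Placeholder blocklist for the abuse filter (assumption for illustration).
BLOCKLIST = {"spamword"}


@dataclass
class IncidentReport:
    category: str
    description: str


def sanitize(transcript: str, max_len: int = 200) -> str:
    """Replace unexpected characters, collapse whitespace, cap the length."""
    text = re.sub(r"[^\w\s.,!?'-]", " ", transcript)
    text = re.sub(r"\s+", " ", text).strip()
    return text[:max_len]


def keyword_classifier(text: str) -> str:
    """Stand-in for the LLM call: maps text to a category by keywords."""
    tokens = set(re.findall(r"[a-z']+", text.lower()))
    if tokens & {"crash", "accident", "collision"}:
        return "crash"
    if tokens & {"jam", "congestion", "stuck"}:
        return "traffic_jam"
    if tokens & {"obstacle", "debris", "tree", "object"}:
        return "obstacle"
    return "other"


def is_acceptable(report: IncidentReport) -> bool:
    """Verify the output: known category and no blocklisted words."""
    tokens = set(re.findall(r"[a-z']+", report.description.lower()))
    return report.category in CATEGORIES and not (tokens & BLOCKLIST)


def report_incident(
    transcript: str,
    classify: Callable[[str], str] = keyword_classifier,
) -> Optional[IncidentReport]:
    """Run sanitize -> classify -> verify on an already transcribed input."""
    text = sanitize(transcript)
    if not text:
        return None
    report = IncidentReport(category=classify(text), description=text)
    return report if is_acceptable(report) else None


if __name__ == "__main__":
    print(report_incident("There's a fallen tree on the right lane"))
```

Keeping the classifier injectable means the verification step stays the same regardless of which model produces the category, so an unexpected model output is simply rejected.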

Any user can now report any (valid) category, and the implementation overhead is manageable.

The current implementation of the feature in the Google Maps app increases the risk of drivers being distracted by their phone. Google should push forward and provide a similar voice integration. I would really like to know how many incidents are reported by drivers themselves rather than by their passengers. While this is something that Google probably doesn’t know as an app developer, it might be knowable by the OS itself. A tap gesture could provide information about how the tap occurred. I can imagine that a finger touching the screen from the left produces a slightly different input than a finger tapping the screen from the right, due to the different angle. This could be used to at least estimate whether a left or a right hand tapped the screen, which in turn could indicate whether it was the driver or the front passenger.

Google should implement voice interactions not only for the initial incident report but also for verifying an incident (“is the incident still present?”) by the cars that follow. Currently, when the user reaches a reported incident, a short audio announcement is played together with an overlay that lets the user select whether the incident is still present (YES / NO). After a few seconds the overlay is automatically dismissed so that it does not distract the user any further. Even as a passenger, I find it hard to tell what exactly happens after the automatic dismissal and which of the two buttons does what. Sometimes I’m unable to read the text inside the overlay. Implementing this as a voice interaction instead would be safer and provide a better UX. It could also increase participation among users / drivers who do not interact directly with the phone while driving. The process can be as follows:

- Let the driver know that there’s a reported incident ahead: “There’s an obstacle reported on the right lane. Is this still present?”

- The Google Maps app listens for audio input: “Yes, there is.” / “No” / “I don’t know” [3]

- The answer is transcribed once again to text and evaluated
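The evaluation step in this flow could look something like the sketch below. It assumes the answer has already been transcribed to text, and it uses plain keyword matching; the word lists and the `evaluate_answer` name are made up for illustration, and a production system would more likely hand the transcript to an LLM or a trained intent model. Anything ambiguous falls back to "unknown" so that an unclear answer never confirms or dismisses an incident.

```python
import re
from enum import Enum


class Answer(Enum):
    YES = "yes"
    NO = "no"
    UNKNOWN = "unknown"


# Illustrative word lists (assumptions, not taken from any real app).
YES_WORDS = {"yes", "yeah", "yep", "correct"}
NO_WORDS = {"no", "nope", "gone", "cleared"}
UNSURE_PHRASES = ("don't know", "do not know", "not sure", "no idea")


def evaluate_answer(transcript: str) -> Answer:
    """Map a transcribed reply to YES / NO / UNKNOWN."""
    # Normalize typographic apostrophes so "don’t" matches "don't".
    text = transcript.lower().replace("\u2019", "'")

    # Check explicit uncertainty first, since "no idea" contains "no".
    if any(phrase in text for phrase in UNSURE_PHRASES):
        return Answer.UNKNOWN

    tokens = set(re.findall(r"[a-z']+", text))
    says_yes = bool(tokens & YES_WORDS)
    says_no = bool(tokens & NO_WORDS)

    if says_yes and not says_no:
        return Answer.YES
    if says_no and not says_yes:
        return Answer.NO
    # Empty, contradictory, or unrecognized input.
    return Answer.UNKNOWN


if __name__ == "__main__":
    for reply in ("Yes, there is.", "No", "I don’t know"):
        print(reply, "->", evaluate_answer(reply).value)
```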

There would be no need to design and integrate an overlay. Hopefully, voice integration will keep improving in the near future.

[1] That said, it has been possible (at least in the past) to produce a phantom traffic jam: https://www.youtube.com/watch?v=k5eL_al_m7Q

[2] This reminds me of the early days of the Apple App Store, when a TomTom navigation app was available for almost 90€. The product has changed over time. (Big) data is now more valuable, and users are more likely to use an app that is available “for free”.

[3] It might not be possible to access the microphone without prior user interaction. The user may need to initiate microphone usage by tapping a button, which could be a showstopper for this feature.